AI robots have moved from science fiction to reality, thanks to advances in machine learning, perception, and decision-making. Today, they analyze data, recognize objects, navigate complex environments, and adapt in real time. Self-learning systems use techniques like reinforcement learning and imitation learning to improve through experience. Onboard processing lets them make decisions quickly, without waiting on a round trip to a remote server, which makes them increasingly valuable in industries like manufacturing and logistics. Keep reading to see how these self-improving machines are transforming our world.

Key Takeaways

- Modern AI robots use machine learning, including reinforcement learning and imitation learning, to improve their performance autonomously over time.

- Advanced onboard sensors and neural processing units enable real-time decision-making without cloud dependence.

- Generative AI simulates scenarios and predicts occluded objects, enhancing safety and adaptability in dynamic environments.

- Self-learning robots are increasingly used in manufacturing, logistics, and space, transforming science fiction concepts into practical applications.

- Challenges such as training speed, data needs, and safety assurance are driving ongoing innovations toward fully autonomous systems.

Artificial intelligence is transforming robots into self-learning systems capable of adapting to complex environments and tasks. You’ll find that modern robots no longer just follow pre-programmed instructions; they analyze data, learn from experience, and improve their performance over time. Machine learning lets these machines get better as they process more information, so they handle unpredictable situations with increasing accuracy. For example, object detection and recognition help robots identify objects, people, vehicles, or animals, supporting safe operation in shared spaces. This capability is essential for tasks like warehouse automation, where robots scan stock in real time and flag anomalies using AI vision systems.
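To make the detection step more concrete, here is a minimal Python sketch of one common post-processing stage in object detectors: discarding low-confidence boxes, then applying greedy non-maximum suppression so each object is reported only once. The `Detection` class, the thresholds, and the sample boxes are hypothetical illustrations, not part of any particular robot’s vision stack.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    score: float                              # detector confidence in [0, 1]
    box: tuple[float, float, float, float]    # (x1, y1, x2, y2) in pixels

def iou(a, b):
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def filter_detections(dets, score_threshold=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply greedy per-label non-maximum suppression."""
    candidates = sorted((d for d in dets if d.score >= score_threshold),
                        key=lambda d: d.score, reverse=True)
    kept = []
    for d in candidates:
        # Keep d unless it heavily overlaps an already-kept, higher-scoring box of the same label
        if all(k.label != d.label or iou(k.box, d.box) < iou_threshold for k in kept):
            kept.append(d)
    return kept

raw = [
    Detection("person", 0.92, (10, 10, 50, 90)),
    Detection("person", 0.85, (12, 12, 52, 88)),   # near-duplicate of the first box
    Detection("pallet", 0.78, (60, 40, 120, 80)),
    Detection("animal", 0.30, (5, 5, 15, 15)),     # below the score threshold
]
for d in filter_detections(raw):
    print(d.label, d.score)
```

Real detectors usually run this step on batched tensors on a GPU or NPU; the plain loop here only shows the logic.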

AI-driven robots analyze data, learn from experience, and adapt in real time for safer, more efficient automation.

Navigation systems are equally sophisticated. Adaptive navigation lets robots reroute when obstacles appear or environmental conditions change, making their movement more efficient and reliable. Predictive decision-making, driven by machine learning, helps them recognize patterns and adjust their behavior accordingly, whether in a factory, a hospital, or even space. Task optimization further improves efficiency by allocating resources, planning paths, and sequencing tasks to minimize downtime and maximize productivity. These systems rely on a blend of sensors (LiDAR, 3D cameras, ultrasonic detectors, and internal sensors) that gather detailed environmental data so robots can interpret their surroundings accurately. Processing that data in real time is vital for maintaining safety and operational efficiency in dynamic environments.
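Adaptive rerouting can be sketched as a plan, move, sense, replan loop. The example below assumes a simple occupancy grid (0 = free, 1 = blocked) and uses A* search with a Manhattan-distance heuristic; `sense_obstacle` is a hypothetical stand-in for whatever LiDAR or camera check a real robot performs. Production planners such as D* Lite reuse earlier search results instead of replanning from scratch, but the behavior is similar in spirit.

```python
import heapq

def astar(grid, start, goal):
    """4-connected A* on a grid of 0 (free) / 1 (blocked). Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    def h(p):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])
    open_heap = [(h(start), 0, start)]
    came_from, g_cost = {start: None}, {start: 0}
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:          # walk parent links back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[node]:
            continue                          # stale heap entry; a cheaper route was found
        r, c = node
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt], came_from[nxt] = ng, node
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None

def navigate(grid, start, goal, sense_obstacle):
    """Follow the planned path, marking newly sensed obstacles and replanning around them."""
    pos, trace = start, [start]
    path = astar(grid, pos, goal)
    while path and pos != goal:
        nxt = path[1]
        if sense_obstacle(nxt):
            grid[nxt[0]][nxt[1]] = 1          # update the map with the new obstacle
            path = astar(grid, pos, goal)     # reroute from the current position
            continue
        pos = nxt
        trace.append(pos)
        path = path[1:]
    return trace if pos == goal else None

grid = [[0] * 3 for _ in range(3)]
hidden = {(0, 1)}                             # obstacle the map does not know about yet
print(navigate(grid, (0, 0), (0, 2), lambda cell: cell in hidden))
```

The robot first plans the direct route, discovers the blocked cell, and detours through the row below.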

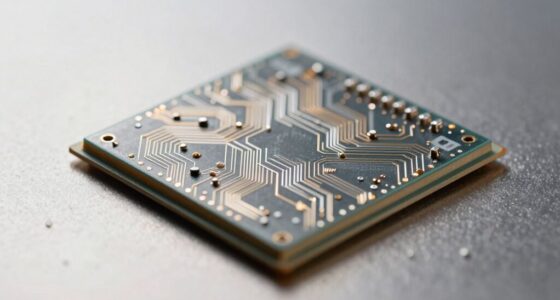

Learning methods like reinforcement learning and imitation learning form the backbone of self-improvement. Reinforcement learning trains robots through trial and error, rewarding successful actions and penalizing mistakes, either in simulation or in real-world settings. Imitation learning copies expert demonstrations, allowing robots to learn complex behaviors from human or virtual mentors. This continual feedback loop helps robots adapt to new situations, much like a child learning to balance. Meanwhile, multimodal AI models combine perception, reasoning, and action, often using a large language model as the backbone that integrates different inputs. Advances in hardware acceleration, such as specialized neural processing units, run these complex models faster, enabling real-time decisions even in demanding environments.
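Trial-and-error learning with rewards and penalties is easiest to see in tabular Q-learning. This minimal sketch assumes a toy five-cell corridor: the robot starts at one end, earns +1 for reaching the other end, and pays a small penalty for every other step. Real robots have far richer state spaces and usually replace the table with a neural network, so treat this as an illustration of the update rule only.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state, action):
    """Toy corridor: +1 for reaching the goal, a small penalty for every other step."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    if nxt == GOAL:
        return nxt, 1.0, True
    return nxt, -0.01, False

def train_q(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((0, 1), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Q-learning update toward reward + discounted best value of the next state
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

q = train_q()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

The penalty on wasted steps is what pushes the learned policy toward the shortest route rather than wandering.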

Generative AI plays an important role by creating synthetic scenarios for training, predicting occluded objects, and producing realistic sensor data for testing. Onboard neural processing units enable real-time decision-making without relying on cloud connectivity, which reduces latency and enhances safety-critical responses. Examples abound: autonomous mobile robots in warehouses recognize and adapt to rearranged shelves; SymBot manages high-density pallet handling with precision; Tesla’s neural networks continually learn to improve vehicle automation.
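Generative models themselves are too large for a short example, but the goal they serve here, producing varied synthetic sensor data for testing, can be illustrated with a much simpler technique called domain randomization. The sketch below is a procedural stand-in, not generative AI. It assumes a hypothetical forward-facing range sensor pointed at a wall, randomizes the wall distance, noise level, and dropout rate for each scan, and then checks a simple median-based distance estimator against the known ground truth.

```python
import random
import statistics
from dataclasses import dataclass

@dataclass
class Scan:
    true_distance: float   # ground-truth distance to the wall (m)
    ranges: list[float]    # simulated beam readings (m); inf marks a dropped return

def synthetic_scans(n_scans, n_beams=8, max_range=10.0, seed=0):
    """Domain-randomized range scans: wall distance, noise level and dropout
    rate are sampled per scan so a model is tested on varied conditions."""
    rng = random.Random(seed)
    scans = []
    for _ in range(n_scans):
        distance = rng.uniform(0.5, max_range)
        noise_std = rng.uniform(0.01, 0.1)   # randomized sensor noise (m)
        dropout = rng.uniform(0.0, 0.2)      # randomized chance of a missing return
        ranges = []
        for _ in range(n_beams):
            if rng.random() < dropout:
                ranges.append(float("inf"))
            else:
                reading = rng.gauss(distance, noise_std)
                ranges.append(min(max(reading, 0.0), max_range))  # clip to sensor limits
        scans.append(Scan(distance, ranges))
    return scans

def estimate_distance(ranges):
    """Median of the valid returns; None if every beam dropped out."""
    finite = [r for r in ranges if r != float("inf")]
    return statistics.median(finite) if finite else None

for scan in synthetic_scans(3):
    print(f"true={scan.true_distance:.2f} estimated={estimate_distance(scan.ranges)}")
```

Because the ground truth is known for every synthetic scan, errors in the estimator can be measured directly before any real-world testing.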

All these advancements make self-learning robots more capable and versatile. They’re increasingly deployed across industries, from manufacturing to logistics and space exploration. Challenges remain, including slow training, large data requirements, and the difficulty of guaranteeing safety, but progress toward fully autonomous, self-improving robots is steady. As these systems evolve, you’ll see them become more intuitive, adaptable, and essential to everyday operations, turning science fiction into reality.

Frequently Asked Questions

How Do AI Robots Ensure Safety During Autonomous Learning?

Safety during autonomous learning comes from strict safety protocols combined with real-time monitoring. AI robots use sensors and computer vision to detect obstacles and hazards as they appear, while reinforcement learning uses reward structures that favor safe behavior. Robots also typically learn in controlled environments or in simulation before real-world deployment, and onboard processing lets them make split-second decisions, reducing risk during interactions with people and their surroundings.
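One common pattern for keeping a learning robot safe is a safety shield: a fixed, hand-written rule layer that checks each command from the learned policy and overrides it when a rule would be broken, paired with a reward penalty so the policy learns to avoid situations that need the override. The distances, speed cap, and penalty below are hypothetical values chosen for illustration, and the rules assume forward motion only.

```python
from dataclasses import dataclass

@dataclass
class Command:
    speed: float      # requested forward speed (m/s) from the learned policy
    steering: float   # requested steering angle (rad)

SAFE_STOP_DISTANCE = 0.5   # hypothetical: stop if an obstacle is this close (m)
SLOW_ZONE = 1.5            # hypothetical: cap speed if an obstacle is this close (m)
MAX_SLOW_SPEED = 0.3       # hypothetical speed cap inside the slow zone (m/s)

def shield(cmd, nearest_obstacle_m):
    """Return (possibly overridden command, whether the shield intervened)."""
    if nearest_obstacle_m <= SAFE_STOP_DISTANCE:
        return Command(0.0, cmd.steering), True
    if nearest_obstacle_m <= SLOW_ZONE and cmd.speed > MAX_SLOW_SPEED:
        return Command(MAX_SLOW_SPEED, cmd.steering), True
    return cmd, False

def shaped_reward(task_reward, intervened, penalty=1.0):
    """Subtract a penalty whenever the shield had to step in."""
    return task_reward - penalty if intervened else task_reward

cmd = Command(speed=1.2, steering=0.1)
for distance in (3.0, 1.0, 0.4):
    safe_cmd, intervened = shield(cmd, distance)
    print(distance, safe_cmd.speed, shaped_reward(1.0, intervened))
```

Keeping the shield separate from the learned policy means the safety rules hold even while the policy is still making mistakes.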

Can AI Robots Experience Emotions or Consciousness?

You might wonder if AI robots can truly experience emotions or consciousness. Currently, they can’t genuinely feel or be aware like humans. They simulate emotional responses based on programming and data patterns, but lack subjective experience. Their actions are driven by algorithms and learned behaviors, not true feelings. So, while they can mimic emotions, they don’t possess consciousness or emotional awareness in the way humans do.

What Are the Ethical Concerns With Self-Learning Robots?

Self-learning robots raise ethical concerns such as loss of human control, unpredictable behavior, and safety risks. If you develop or work with these AI systems, ensure transparency in their decision-making and set clear boundaries on what they may do. You also need to consider accountability for mistakes and the potential for bias. Prioritizing safety, privacy, and moral responsibility helps prevent harm and builds trust in autonomous robotics.

How Do AI Robots Handle Unpredictable Real-World Scenarios?

You might think AI robots struggle with unpredictability, but they increasingly cope with it through methods like reinforcement learning and continual learning. They adapt by analyzing real-world data, updating their behavior, and making decisions in real time. They’re not perfect, but ongoing improvements in perception, reasoning, and onboard processing help them react quickly to unexpected situations, making them more reliable in dynamic environments.

What Is the Future of Human-Robot Collaboration in Learning?

You can expect human-robot collaboration in learning to become more seamless and adaptive. Robots will learn from your demonstrations and feedback, continuously improving their skills in real time. As AI advances, they’ll better understand your intentions, communicate naturally, and work alongside you safely. This collaboration will boost productivity, foster innovation, and create new opportunities for shared growth, making robots more intuitive partners in both work and daily life.
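Learning from demonstrations and feedback can be sketched with the simplest form of behavior cloning: store (observation, action) pairs recorded while a person guides the robot, then copy the action from the most similar recorded situation. When the person corrects a mistake, the correction becomes one more demonstration, loosely in the spirit of interactive methods such as DAgger. The observation format, action names, and sample data below are hypothetical.

```python
import math

# Hypothetical demonstrations recorded while a person guides the robot:
# observation = (distance_to_object_m, lateral_offset_m), action = what the person chose.
demos = [
    ((2.0, 0.0), "forward"),
    ((1.5, 0.3), "turn_left"),
    ((1.5, -0.3), "turn_right"),
    ((0.3, 0.0), "grasp"),
]

def nearest_action(demonstrations, obs):
    """1-nearest-neighbor behavior cloning: reuse the action from the most similar demo."""
    _, action = min(demonstrations, key=lambda d: math.dist(obs, d[0]))
    return action

obs = (0.9, 0.0)
print(nearest_action(demos, obs))         # "grasp": the cloned policy reaches too early
demos.append(((0.9, 0.0), "slow_down"))   # the person's correction becomes a new demo
print(nearest_action(demos, obs))         # "slow_down"
```

Nearest-neighbor lookup keeps the idea visible; practical systems usually train a neural network on many demonstrations instead.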

Conclusion

As you’ve seen, AI robots that learn independently are transforming our world. Did you know that industry forecasts project tens of billions of connected IoT devices, including intelligent robots, in use by 2030? That growth shows how quickly connected, learning machines are becoming part of daily life. Embracing this technology means you’re part of a future where machines adapt and improve themselves, opening new possibilities across industries.